How I Built an SEO Analysis Tool with AI Agents

A high-level look at building an SEO analysis tool with GitHub Copilot and Claude Sonnet 4: what worked, what broke, and why AI speed still needs human direction.

AI is quickly reshaping the way we build software. Instead of spending hours writing boilerplate code, we can now direct AI agents to generate, refine, and even run code on our behalf. Recently, I decided to put this to the test.

Can I build a functional SEO analysis tool almost entirely with AI agents like GitHub Copilot and Claude Sonnet 4?

The answer: yes - with some caveats. Here’s how it went.

👉 This was initially recorded as a video, since the step-by-step process is often better explained visually: Watch AI Agents Build an SEO Tool with GitHub Copilot

Starting from Zero

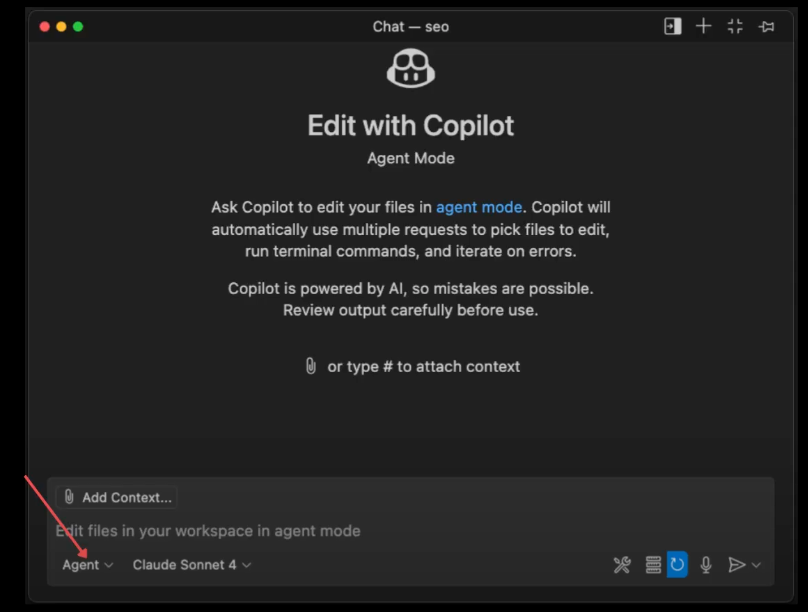

I began with an empty directory in VS Code: no pre-written snippets, no starter templates. Just me, my development setup, and AI agents switched on.

The idea wasn’t to craft every function myself, but to see how much I could lean on AI. My role would be to guide, review, and occasionally intervene when things went off track.

Letting AI Define the Requirements

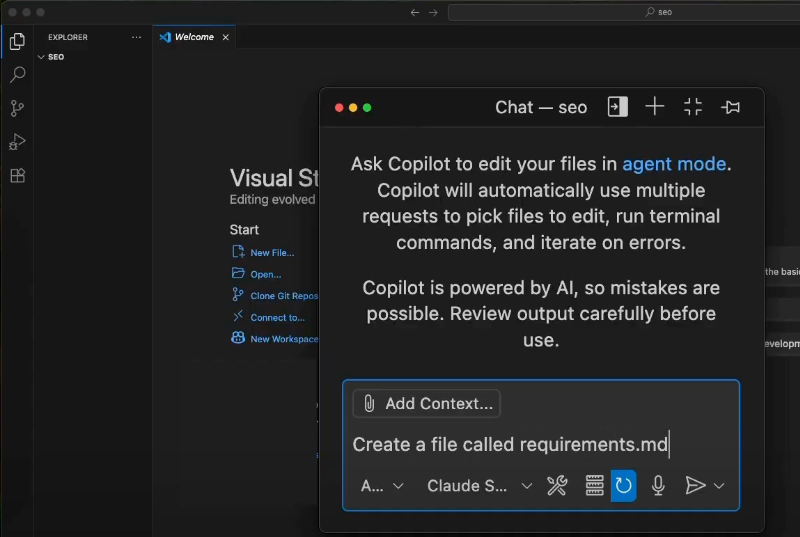

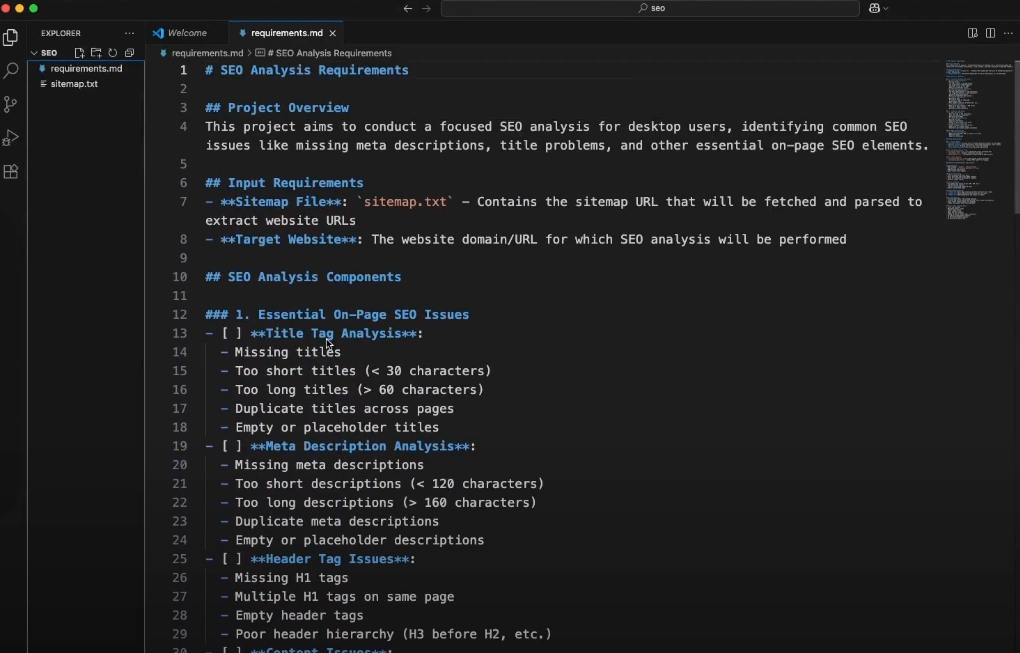

Instead of jumping straight into code, I first asked Copilot to generate a requirements file. This step turned out to be crucial.

Why? Because requirements act like a blueprint. By having AI write them down first, I could refine the scope and priorities before any code was generated. It also meant that Copilot could refer back to a clear document rather than relying on its fuzzy memory of my prompts.

The AI’s first pass was ambitious. It included everything from mobile responsiveness checks to full-blown reporting features. Useful, but not practical for my purpose. I guided it to narrow the scope to something simpler: crawl my website via its sitemap, check for broken links and images, and produce a clean HTML report.

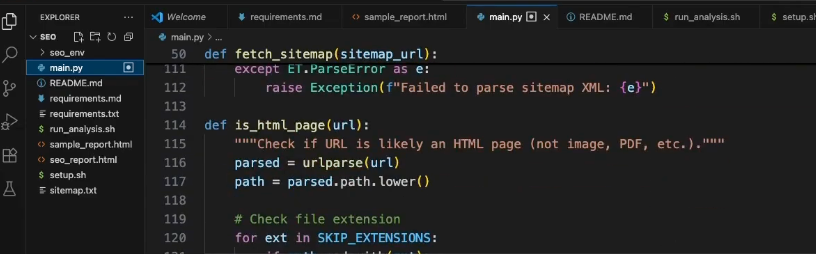
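To make the narrowed scope concrete, here is a minimal sketch of two of its pieces: collecting the links and images on a page, and rendering results as a bare HTML table. This is not the code Copilot generated. The names `LinkCollector` and `render_report`, the `example.com` URLs, and the report layout are all assumptions made for illustration, and only the Python standard library is used.

```python
from html import escape
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Hypothetical helper: collect absolute URLs of <a href> and <img src>."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self.images = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and attrs.get("href"):
            # Resolve relative links against the page URL.
            self.links.append(urljoin(self.base_url, attrs["href"]))
        elif tag == "img" and attrs.get("src"):
            self.images.append(urljoin(self.base_url, attrs["src"]))


def render_report(results):
    """Render (url, status) pairs as a minimal HTML table (assumed layout)."""
    rows = "\n".join(
        f"<tr><td>{escape(url)}</td><td>{escape(str(status))}</td></tr>"
        for url, status in results
    )
    return f"<table>\n<tr><th>URL</th><th>Status</th></tr>\n{rows}\n</table>"


# Usage with an inline page, so the sketch runs without network access.
page = '<a href="/about">About</a><img src="logo.png">'
collector = LinkCollector("https://example.com/blog/")
collector.feed(page)
report_html = render_report([(url, "unchecked") for url in collector.links])
```

A real crawler would fetch each collected URL and record its HTTP status before rendering the report; that part is left out here to keep the sketch self-contained.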

From Blueprint to Code

Generating a sample report

I wanted to make sure Copilot understood what I really wanted from the report. So, I asked it to create a sample HTML report. Agreeing on the output early leaves less ambiguity once the complexity increases.

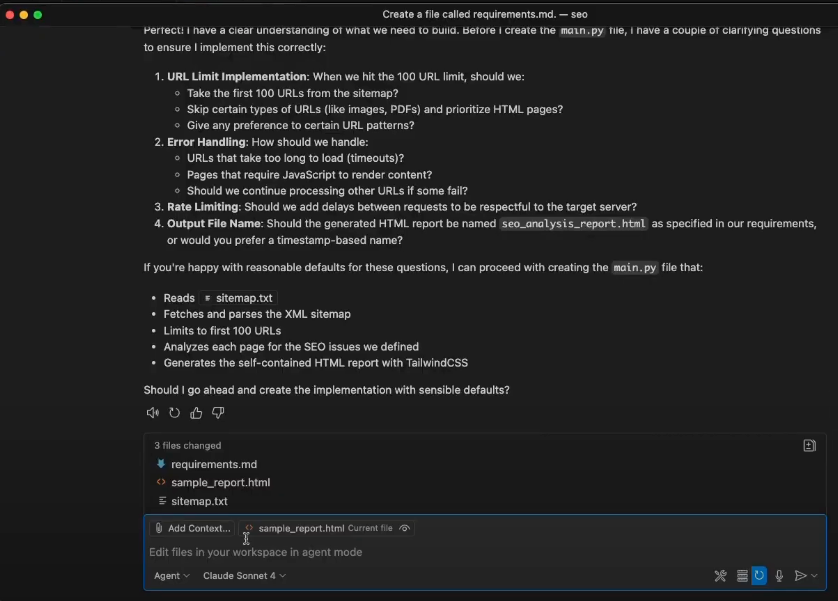

Making it ask questions

I had a feeling the requirements were still missing something explicit. Copilot works fast, which increases the cognitive load of reviewing its output, so gaps are easy to overlook. I prompted Copilot to ask me questions, and it came back with good ones before we had written any code.

Once the requirements were set, I asked Copilot to generate the actual code.

To my surprise, it did more than just spit out Python functions. It also generated shell scripts and scaffolded the workflow in a way that suited my preferences (though that may have been coincidental). I didn’t always have to spell things out; sometimes it just made reasonable choices on its own. Other times, I had to ask it to redo things to follow better practices.

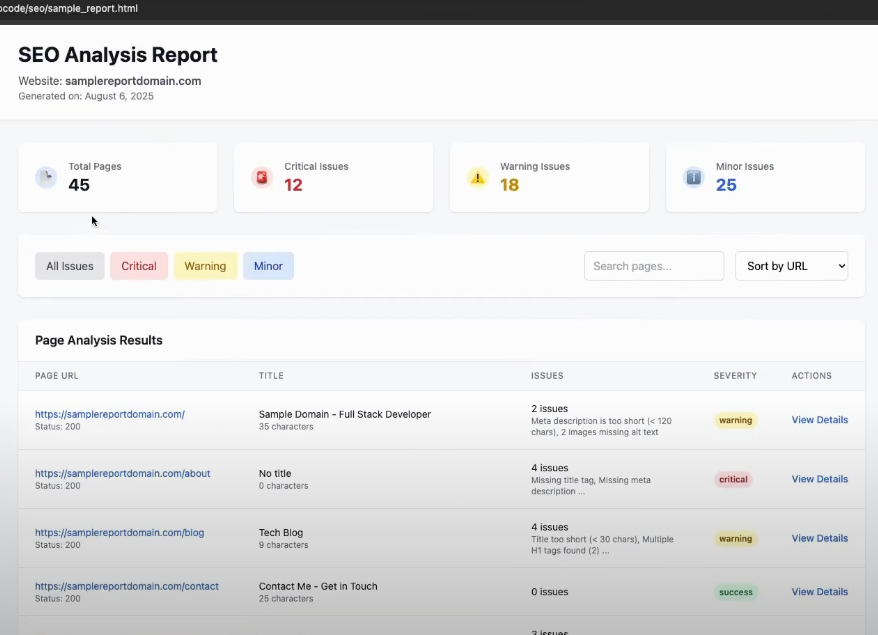

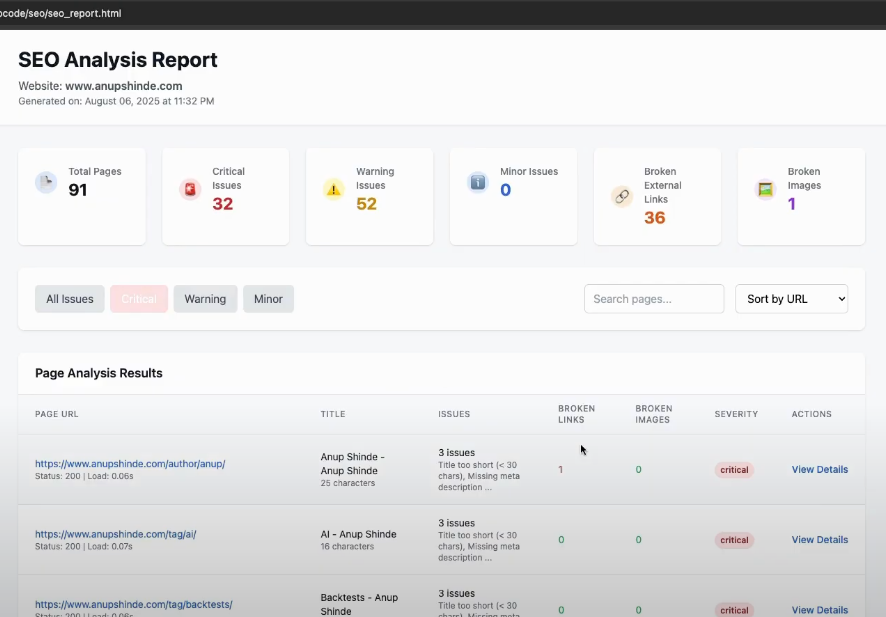

Of course, it wasn’t flawless. At first, the tool ignored deeper (nested) sitemaps. Then it began misclassifying HTTP 403 Forbidden responses as broken links. Later, its caching system turned out to be too limited. Each time, I nudged it back on course with a minor clarification. With those adjustments, I soon had a working tool producing reports I could actually use.
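The first two fixes can be sketched as follows, assuming that "deeper sitemaps" means sitemaps referenced from a sitemap index and that "forbidden" means HTTP 403. The function names, the injected `fetch` callable, the `"blocked"` label, and the fake site data are hypothetical and are not taken from the generated tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def collect_page_urls(sitemap_url, fetch, seen=None):
    """Return page URLs, following nested sitemap indexes recursively.

    `fetch` is an injected callable (an assumption for testability) that
    takes a URL and returns the sitemap XML as text.
    """
    seen = set() if seen is None else seen
    if sitemap_url in seen:  # guard against sitemaps that reference each other
        return []
    seen.add(sitemap_url)

    root = ET.fromstring(fetch(sitemap_url))
    if root.tag.endswith("sitemapindex"):
        urls = []
        for loc in root.findall("sm:sitemap/sm:loc", SITEMAP_NS):
            urls.extend(collect_page_urls(loc.text.strip(), fetch, seen))
        return urls
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]


def classify_status(status):
    """Classify an HTTP status code; None stands for 'no response at all'."""
    if status is None:
        return "broken"
    if 200 <= status < 400:
        return "ok"
    if status == 403:
        # The server answered but refused the crawler, so the link
        # itself is not necessarily dead.
        return "blocked"
    return "broken"


# Fake site data so the sketch runs without network access.
FAKE_SITE = {
    "https://example.com/sitemap.xml": (
        '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<sitemap><loc>https://example.com/sitemap-posts.xml</loc></sitemap>"
        "</sitemapindex>"
    ),
    "https://example.com/sitemap-posts.xml": (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.com/</loc></url>"
        "<url><loc>https://example.com/blog/post-1</loc></url>"
        "</urlset>"
    ),
}
page_urls = collect_page_urls("https://example.com/sitemap.xml", FAKE_SITE.__getitem__)
```

Injecting `fetch` keeps the sketch independent of any particular HTTP client; in a real tool it would wrap whatever library performs the requests and could also be where caching lives.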

Final report generated after the crawl

Reviewing and Debugging

This stage was the most human-heavy. The AI could generate a lot of working code, but it still made mistakes and skipped details. I had to check whether reports were accurate, test how the caching strategy worked, and correct logical issues.

The fundamental dynamic here was clear: AI brings speed, I bring direction. It’s less about “replacing” the developer and more about shifting roles - from typing code line by line to orchestrating and validating what the AI creates.

Key Takeaways

- Start with requirements. Having AI generate and refine a requirements file first makes the whole process smoother.

- Expect to intervene. AI agents are powerful, but they misinterpret scope and logic. Human judgment is still essential.

- Leverage AI’s strengths. Let it handle repetitive coding, scaffolding, and boilerplate. Focus your time on oversight and testing.

- Treat it as a collaboration. Think of AI not as an autopilot, but as a very fast developer who needs constant guidance. Over time, you’ll also notice that the human developer learns the AI’s quirks and preferences - but the AI itself doesn’t really remember much between sessions (at least for now). That adds a cognitive load for you as the director/manager/developer.

- Encourage questions. You have to explicitly tell the AI to ask questions. It doesn’t matter whether your requirements are perfect or vague; if you don’t encourage questions, the AI may proceed with assumptions that waste time and tokens. If you do, you’ll often be surprised by how well it can clarify and improve the work.

When using AI agents, keep track of the tokens or credits being consumed.

Once, when I used a similar but more detailed workflow that included unit tests, the AI agent got stuck in a giant loop trying to fix the tests and consumed a lot of credits/tokens, even with a human watching closely.

Final Thoughts

This experiment demonstrates that AI agents are already capable of building practical, functional tools. My SEO analysis tool wasn’t perfect, but it worked. And it wasn't meant to be perfect; it was a one-time solution I needed for a website migration. Still, the process shows that working through agents makes AI capable of much more.

More importantly, the process highlighted a new developer mindset: one where I act as a director and reviewer rather than just a coder. The AI provides speed, but I provide clarity, constraints, and accountability.

A day after I recorded the initial video, GPT-5 launched and became available as a preview in GitHub Copilot. For anyone wondering how this setup fares against GPT-5: GPT-5 may be slightly better at coding and reasoning, but in my experience it was forgetful, and it didn't live up to the hype. The forgetfulness is something that can and should be addressed in the agentic development workflow, and further modularizing the requirements can help achieve this.

I also conducted a similar experiment using Copilot to transform requirements into user stories and tasks for a whole project. That was an AI-assisted project breakdown. Leave a comment below if you’d like me to elaborate on that one further.
