Building AI Agents in Python

From simple rules to clarifying loops: explore this 3-part series on building AI agents in Python, with examples.

Over the last three articles, we traced how agents evolve, from simple decision-makers to conversational partners that clarify before acting. This summary ties the series together and points to what’s next.

Part 1: Simple Decision Agent

We began with the basics: an acting agent that follows strict rules. You gave it structured input (ingredients, hunger level, cooking time), and it suggested a recipe. There was no ambiguity and no guesswork, but also no flexibility when the input wasn’t structured.

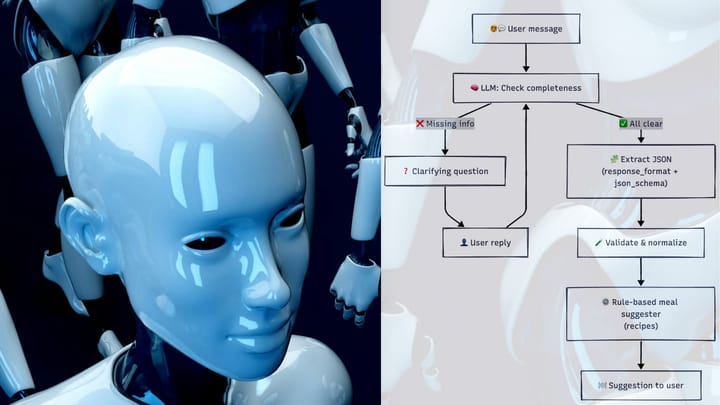
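As a reminder of what "rules only" looks like, here is a minimal sketch of such an acting agent. The field names, the recipe table, and the thresholds are illustrative assumptions, not the exact code from Part 1.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class KitchenInput:
    """Structured input the rule-based agent expects (field names are assumed)."""
    ingredients: set[str]
    hunger_level: int  # 1 (snack) to 5 (starving)
    cooking_time: int  # minutes available


# Hypothetical recipe table: name -> (required ingredients, minutes, minimum hunger).
RECIPES = {
    "fried rice": ({"eggs", "rice"}, 15, 3),
    "boiled eggs": ({"eggs"}, 10, 1),
    "veggie stir-fry": ({"rice", "vegetables"}, 25, 4),
}


def suggest_recipe(user: KitchenInput) -> str | None:
    """Return the first recipe whose rules all pass, or None if nothing fits."""
    for name, (needed, minutes, min_hunger) in RECIPES.items():
        has_ingredients = needed <= user.ingredients  # subset check
        has_time = minutes <= user.cooking_time
        hungry_enough = user.hunger_level >= min_hunger
        if has_ingredients and has_time and hungry_enough:
            return name
    return None
```

Calling `suggest_recipe(KitchenInput({"eggs", "rice"}, 3, 20))` walks the table in order and stops at the first match. Anything that does not fit the dataclass never reaches the rules, which is exactly the rigidity described above.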

Part 2: LLM-Powered Agent

Next, we added a perceiving layer with GPT-5. Instead of rigid inputs, the user could type naturally (“I’m in a rush with some eggs and rice”), and the LLM translated that into structured JSON. The agent still acted based on rules, but perception became much more human-like.

This highlighted a key split:

- Perceiving agent → understands and structures messy input.

- Acting agent → applies logic or rules to that structured input.

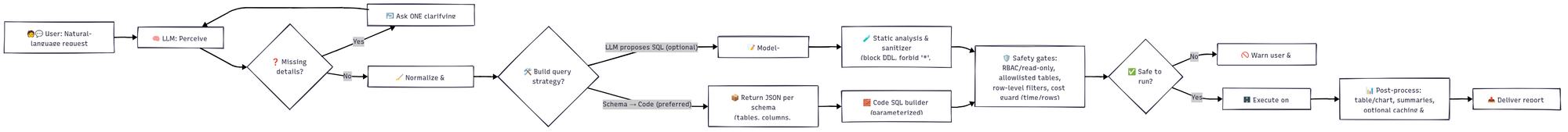
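The split can be sketched without calling any model: treat the LLM reply as an untrusted JSON string, validate it, and only then hand it to the acting agent. The field names and the single rule in `act` are assumptions for illustration; the actual LLM call is deliberately left out.

```python
import json

# Fields the acting agent needs, with the Python type each must have (assumed names).
REQUIRED_FIELDS = {"ingredients": list, "hunger_level": int, "cooking_time": int}


def parse_perception(llm_reply: str) -> dict:
    """Turn the model's JSON reply into a validated dict for the acting agent."""
    data = json.loads(llm_reply)  # raises json.JSONDecodeError on non-JSON output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in data:
            raise ValueError(f"missing field: {field}")
        value = data[field]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            raise ValueError(f"wrong type for field: {field}")
    # Drop any extra keys the model may have added.
    return {field: data[field] for field in REQUIRED_FIELDS}


def act(structured: dict) -> str:
    """Toy acting agent: one hard-coded rule applied to structured input."""
    quick = structured["cooking_time"] <= 15
    if quick and {"eggs", "rice"} <= set(structured["ingredients"]):
        return "fried rice"
    return "no suggestion"
```

For the sentence “I’m in a rush with some eggs and rice”, a well-behaved model might reply with `{"ingredients": ["eggs", "rice"], "hunger_level": 3, "cooking_time": 10}`. The validator guards the acting agent against anything less well-formed.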

Part 3: Clarifying Agent

Finally, we introduced a clarifying loop. Instead of guessing at vague values (“kinda hungry”), the agent asked follow-up questions until it had complete information. This step is crucial: without it, the prompt can push the LLM to infer values the user never actually gave, and those guesses turn into silent errors.
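A clarifying loop can be sketched as "find the gaps, ask about one, repeat". In this simplified version the answer is stored as raw text; in the series the reply would go back through the perceiving LLM. The field names and the `max_turns` cap are assumptions.

```python
from __future__ import annotations

from typing import Callable

FIELDS = ("ingredients", "hunger_level", "cooking_time")


def missing_fields(state: dict) -> list[str]:
    """Fields the perceiving step left as None because the user never stated them."""
    return [name for name in FIELDS if state.get(name) is None]


def clarify(state: dict, ask: Callable[[str], str], max_turns: int = 5) -> dict:
    """Ask about one missing field per turn until the state is complete."""
    state = dict(state)  # work on a copy so the caller's dict is untouched
    for _ in range(max_turns):
        gaps = missing_fields(state)
        if not gaps:
            return state
        field = gaps[0]
        answer = ask(f"Could you tell me your {field.replace('_', ' ')}?").strip()
        if answer:  # an empty answer leaves the gap open for the next turn
            state[field] = answer
    if missing_fields(state):
        raise ValueError("still missing information after clarification")
    return state
```

In a terminal, `clarify(state, input)` is enough to try it, since the built-in `input` takes a prompt and returns the typed answer. The turn cap keeps the loop from nagging the user forever.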

Prompting Challenges

Across the series, the biggest challenge was how much to let the model infer.

- Too strict, and the user experience feels clunky.

- Too lenient, and the model fills gaps with assumptions.

By explicitly prompting the model not to infer and designing loops for clarification, we made the agent more trustworthy.
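One way to phrase "do not infer" is to give the model an explicit escape hatch: return null for anything the user did not state. The wording and field descriptions below are a hypothetical example, not the prompt used in the series.

```python
# Hypothetical field descriptions for the recipe agent.
FIELDS = {
    "ingredients": "list of ingredient names",
    "hunger_level": "integer from 1 to 5",
    "cooking_time": "minutes available, as an integer",
}


def build_system_prompt(fields: dict) -> str:
    """Compose a system prompt that forbids guessing missing values."""
    schema_lines = "\n".join(
        f'- "{name}": {description}, or null' for name, description in fields.items()
    )
    return (
        "Extract the following fields from the user's message and reply with JSON only.\n"
        f"{schema_lines}\n"
        "Do not infer or guess. If the user did not state a value clearly, "
        "set that field to null so the application can ask a follow-up question."
    )
```

The null values are what the clarifying loop keys on: the prompt keeps the model from filling gaps, and the loop fills them by asking.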

Example Beyond Recipes: SQL Queries

It’s easy to see how these agent patterns apply outside the kitchen. One powerful scenario is interacting with your database using natural language.

How it might work

A user types: “Show me the top 10 customers by revenue last quarter.”

- The perceiving layer (LLM) translates this into a SQL query like:

      SELECT customer_name, SUM(revenue) AS total_revenue
      FROM sales
      WHERE sale_date BETWEEN '2024-04-01' AND '2024-06-30'
      GROUP BY customer_name
      ORDER BY total_revenue DESC
      LIMIT 10;

- The acting agent runs the query and formats the results as a neat table or even generates a chart (e.g., a bar graph of top customers).

- With a little more prompting design, the agent could also create automated reports: monthly summaries, trend analyses, or regional breakdowns, all triggered by plain-English questions.

This kind of workflow makes data more accessible to non-technical users, lowering the barrier to insight.
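To make the acting side concrete, here is a minimal sketch that runs the query above against an in-memory SQLite database and prints a plain-text table. The `sales` schema and the demo rows are assumptions, and the dates and limit are passed as bound parameters rather than pasted into the SQL string.

```python
import sqlite3

# Same shape as the query above, with values supplied as bound parameters.
QUERY = """
SELECT customer_name, SUM(revenue) AS total_revenue
FROM sales
WHERE sale_date BETWEEN ? AND ?
GROUP BY customer_name
ORDER BY total_revenue DESC
LIMIT ?
"""


def demo_connection() -> sqlite3.Connection:
    """Build a throwaway in-memory database with a hypothetical sales table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (customer_name TEXT, revenue REAL, sale_date TEXT)")
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [
            ("Acme", 1200.0, "2024-04-15"),
            ("Acme", 300.0, "2024-05-02"),
            ("Globex", 900.0, "2024-06-30"),
            ("Initech", 5000.0, "2024-07-01"),  # falls outside the quarter
        ],
    )
    return conn


def top_customers(conn: sqlite3.Connection, start: str, end: str, limit: int = 10) -> list:
    """Run the report query and return (customer_name, total_revenue) rows."""
    return conn.execute(QUERY, (start, end, limit)).fetchall()


def format_table(rows: list, headers: tuple) -> str:
    """Render rows as left-aligned, space-padded text columns."""
    widths = [max(len(str(value)) for value in column) for column in zip(headers, *rows)]
    lines = [
        "  ".join(str(value).ljust(width) for value, width in zip(row, widths))
        for row in [headers, *rows]
    ]
    return "\n".join(lines)
```

A call such as `print(format_table(top_customers(demo_connection(), "2024-04-01", "2024-06-30"), ("customer_name", "total_revenue")))` prints the header row followed by one line per customer. Charting or report generation would hang off the same rows.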

The Risks

But the same power comes with serious risks:

- Malicious prompts: Someone could say “DROP the users table” or “DELETE all rows from sales”. Unless guardrails such as query sanitization, preventive prompts, and read-only access are in place, the agent might generate and run destructive SQL.

- Accidental damage: Even without malice, vague wording like “clear old data” could translate into unsafe operations.

- Costly queries: A user could unknowingly ask for “all rows with full join across 10 tables”, which could crash the database or rack up a massive cloud bill.

That’s why clarifying loops are important, but they’re not the whole solution.

Before acting, an agent should also sanitize inputs and add guardrails. One approach is to have the LLM return a query description constrained by a JSON schema, then build the final SQL in code instead of letting the LLM write it directly.

The advantage: predictable, sanitized queries that reduce the risk of destructive commands.

The tradeoff: you lose some of the LLM’s flexibility in generating complex SQL.

How far you go depends on how capable your sanitization layer is and how much risk you can tolerate.
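Here is a minimal sketch of the schema-then-code approach. The spec keys (`table`, `columns`, `aggregates`, `filters`, `group_by`, `order_by`, `limit`), the allowlist, and the row cap are all assumptions. The point is that identifiers come only from an allowlist and values only travel as bound parameters.

```python
from __future__ import annotations

# Hypothetical allowlist: the only tables and columns the agent may touch.
ALLOWED = {"sales": {"customer_name", "revenue", "sale_date", "region"}}
AGGREGATES = {"SUM", "COUNT", "AVG", "MIN", "MAX"}
OPERATORS = {"=", "<", "<=", ">", ">=", "BETWEEN"}
MAX_LIMIT = 100


def build_select(spec: dict) -> tuple[str, list]:
    """Build a parameterized SELECT from a JSON-like spec produced by the LLM."""
    table = spec["table"]
    if table not in ALLOWED:
        raise ValueError(f"table not allowed: {table}")

    def col(name: str) -> str:
        if name not in ALLOWED[table]:
            raise ValueError(f"column not allowed: {name}")
        return name

    select_parts = [col(c) for c in spec.get("columns", [])]
    aliases = set()
    for agg in spec.get("aggregates", []):
        func, alias = agg["func"].upper(), agg["alias"]
        if func not in AGGREGATES or not alias.isidentifier():
            raise ValueError(f"aggregate not allowed: {agg}")
        aliases.add(alias)
        select_parts.append(f"{func}({col(agg['column'])}) AS {alias}")
    if not select_parts:
        raise ValueError("nothing to select")

    sql = f"SELECT {', '.join(select_parts)} FROM {table}"
    params: list = []
    where = []
    for flt in spec.get("filters", []):
        op = flt["op"].upper()
        if op not in OPERATORS:
            raise ValueError(f"operator not allowed: {op}")
        if op == "BETWEEN":
            low, high = flt["value"]
            where.append(f"{col(flt['column'])} BETWEEN ? AND ?")
            params += [low, high]
        else:
            where.append(f"{col(flt['column'])} {op} ?")
            params.append(flt["value"])
    if where:
        sql += " WHERE " + " AND ".join(where)
    if spec.get("group_by"):
        sql += " GROUP BY " + ", ".join(col(c) for c in spec["group_by"])
    order = spec.get("order_by")
    if order:
        name = order["column"]
        if name not in aliases:
            col(name)  # raises unless it is an allowlisted column
        sql += f" ORDER BY {name} {'DESC' if order.get('desc') else 'ASC'}"
    # Clamp the row count so neither 0, a negative value, nor a huge one slips through.
    params.append(max(1, min(int(spec.get("limit", MAX_LIMIT)), MAX_LIMIT)))
    return sql + " LIMIT ?", params
```

The returned pair plugs straight into a DB-API call such as `cursor.execute(sql, params)`. A request for `DROP` has nowhere to go, because the builder can only ever emit a `SELECT`.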

In short: natural language → structured query → safe execution, but only if the agent clarifies before acting.

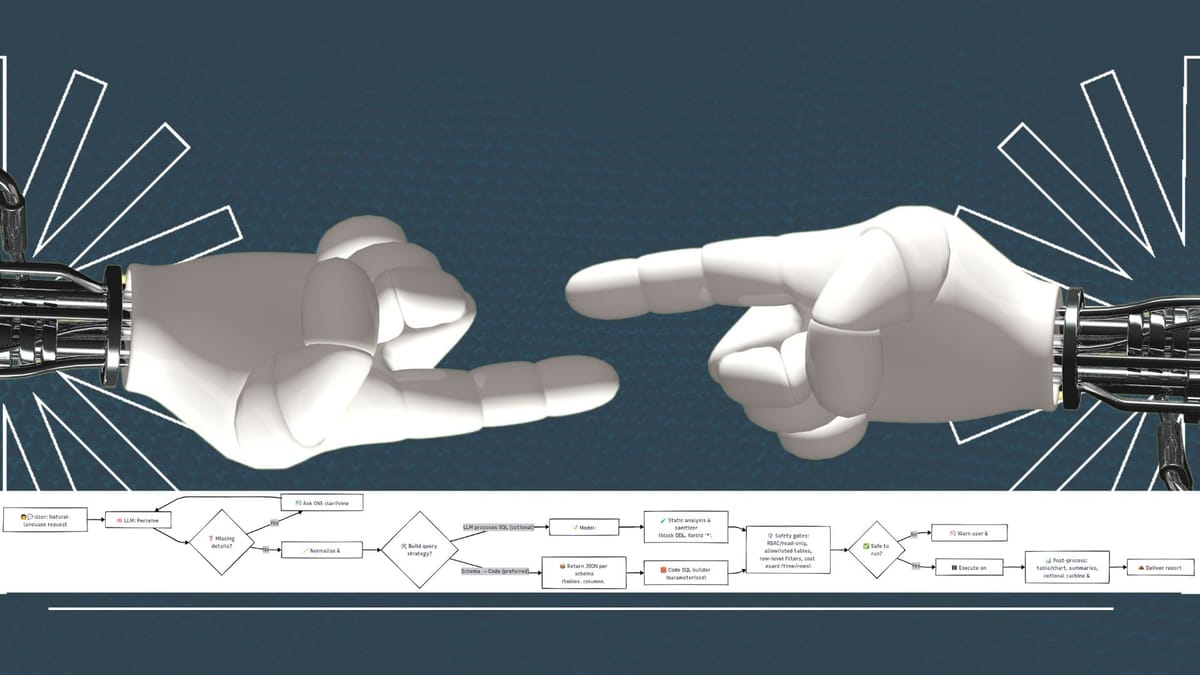

Hypothetical SQL Agent Flow

---

config:

layout: fixed

look: handDrawn

---

flowchart LR

A["🧑💬 User: Natural-language request"] --> B["🧠 LLM: Perceive intent"]

B --> C{"❓ Missing details?"}

C -- Yes --> D["↩️ Ask ONE clarifying question"]

D --> B

C -- No --> E["🧹 Normalize & validate inputs"]

E --> F{"🛠️ Build query strategy?"}

F -- Schema → Code (preferred) --> G["📦 Return JSON per schema<br>(tables, columns, filters, group_by, limit)"]

G --> H["🧱 Code SQL builder (parameterized)"]

F -- LLM proposes SQL (optional) --> I["📝 Model-generated SQL"]

I --> J@{ label: "🧪 Static analysis & sanitizer<br>(block DDL, forbid '*', cap LIMIT/OFFSET)" }

H --> K["🛡️ Safety gates:<br>RBAC/read-only, allowlisted tables,<br>row-level filters, cost guard (time/rows)"]

J --> K

K --> L{"✅ Safe to run?"}

L -- No --> M["🚫 Warn user & block action"]

L -- Yes --> N["🗄️ Execute on database"]

N --> O["📊 Post-process: table/chart, summaries,<br>optional caching & pagination"]

O --> P["📤 Deliver report to user"]

J@{ shape: rect}
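For the optional branch where the model proposes SQL itself, the sanitizer and the read-only gate from the diagram could look roughly like this. It is a deliberately crude keyword filter with an assumed row cap, shown against SQLite. A production setup would lean on a real SQL parser and on database-level permissions instead of regular expressions.

```python
import re
import sqlite3

MAX_ROWS = 1000  # assumed cap on returned rows
FORBIDDEN = re.compile(
    r"\b(INSERT|UPDATE|DELETE|DROP|ALTER|CREATE|TRUNCATE|REPLACE|ATTACH|PRAGMA)\b",
    re.IGNORECASE,
)
SELECT_STAR = re.compile(r"\bSELECT\s+(\w+\.)?\*", re.IGNORECASE)
TRAILING_LIMIT = re.compile(r"\bLIMIT\s+(\d+)\s*$", re.IGNORECASE)


def check_sql(sql: str) -> str:
    """Return a capped, single-statement SELECT or raise ValueError."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        raise ValueError("multiple statements are not allowed")
    if not re.match(r"SELECT\b", statement, re.IGNORECASE):
        raise ValueError("only SELECT statements are allowed")
    if FORBIDDEN.search(statement):
        raise ValueError("statement contains a forbidden keyword")
    if SELECT_STAR.search(statement):
        raise ValueError("SELECT * is not allowed; name the columns")
    match = TRAILING_LIMIT.search(statement)
    if match is None or int(match.group(1)) > MAX_ROWS:
        # Wrap instead of editing the text, so inner ORDER BY/OFFSET stay valid.
        statement = f"SELECT * FROM ({statement}) AS capped LIMIT {MAX_ROWS}"
    return statement


def run_guarded(conn: sqlite3.Connection, sql: str) -> list:
    """Second line of defense: make the SQLite connection refuse writes, then run."""
    conn.execute("PRAGMA query_only = ON")
    return conn.execute(check_sql(sql)).fetchall()
```

The two layers are intentionally redundant. The text check will produce false positives (a string literal containing "drop", for example) and can be fooled, while `PRAGMA query_only` makes SQLite itself reject writes on that connection even if a bad statement gets through.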

Wrapping Up the Series

This 3-part journey showed how agents can grow step by step:

- Simple Decision Agent → rules only.

- LLM-Powered Agent → understands natural language.

- Clarifying Agent → asks questions until everything is clear.

The SQL example shows why this matters: without clarifications, agents can become dangerous. With them, they become trustworthy collaborators.

What’s Next: RAG and CustomGPT

This series covered the basics of agent design: rules → perception → clarification. But the story doesn’t stop here.

Next, we’ll explore:

- RAG (Retrieval-Augmented Generation): making agents smarter by pulling in external knowledge safely.

- CustomGPT: tailoring models to your domain with guardrails and personality.

Stay tuned.
